I’m attending the 2012 Data Science Summit and I am happy to report it has been well worth my time. It isn’t a nuts-and-bolts conference on which technologies to use or how to use them, which processes you should follow, or which machine learning algorithms to apply in a given situation. Instead there are presentations and panels on topics around working with data that apply directly to much of the work I do.

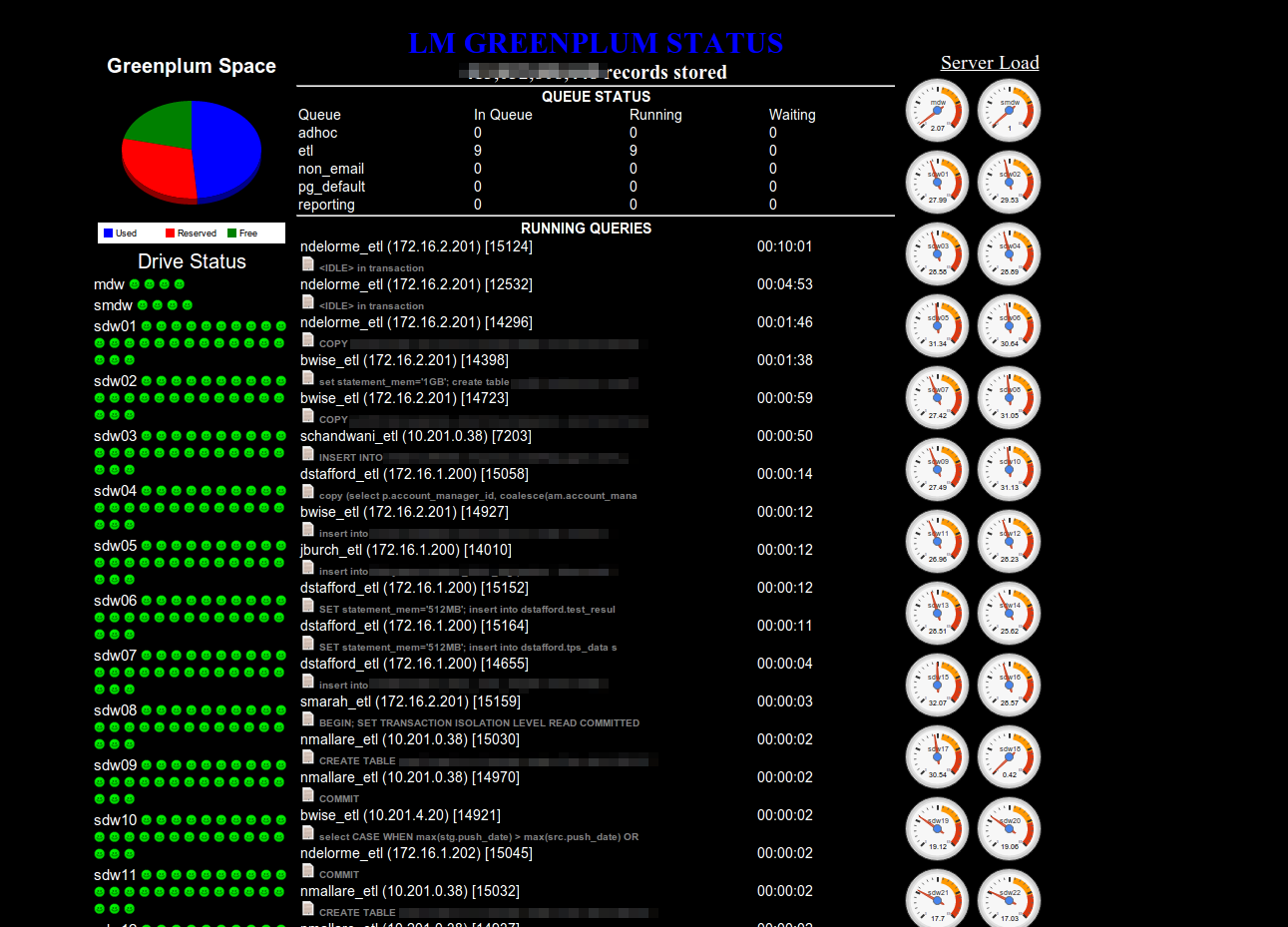

Dashboarding Greenplum

When Greenplum first landed in our shop they wanted us to use gpperfmon. It quickly became obvious that it wasn’t stable at that time and that it created way too much overhead. So a couple of years ago I came up with my own dashboarding tools that rely on the database as little as possible and exist outside of the cluster. My thought was that if the cluster is down, it’s pretty hard to troubleshoot what’s wrong with it when the stats are kept in the cluster itself. The tool I came up with blends some Greenplum query checks with sar data and uses MegaCLI to pull disk health. Here’s a quick glimpse so you can get an idea of what I’ve got going.

Went to drop an update on the website and it is in all kinds of pain. Looks like there is some work to be done.

Falling behind

The gpadmin site is sorely lacking in updates recently. That’s not to say I don’t have things to post about. Just haven’t had the time to make a reasonable post about them. Look for some updates soon.

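I use this to figure out what’s happening on the nodes and whether I might have hanging queries on one of them. The number in front of each conNNNN session ID is the count of postgres processes for that session on the node.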

gpssh -f hosts.seg

=> ps -ef | grep postgres | grep con | awk '{print $12}' | sort | uniq -c; echo "===="

[node02] 8 con5397

[node02] 12 con5769

[node02] 9 con5782

[node02] 4 con5989

[node02] ====

[node04] 8 con5397

[node04] 12 con5769

[node04] 8 con5782

[node04] 4 con5989

[node04] ====

[node03] 8 con5397

[node03] 12 con5769

[node03] 8 con5782

[node03] 4 con5989

[node03] ====

[node01] 8 con5397

[node01] 12 con5769

[node01] 8 con5782

[node01] 4 con5989

[node01] ====

=>
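Eyeballing that output gets tedious with more nodes, so here is a minimal sketch of a filter that does the comparison. It assumes the gpssh output has been saved or piped in the `[node] count conNNNN` shape shown above; the function name `find_uneven_sessions` is made up for illustration. It only reports sessions whose process count is not the same on every node that lists them, which is a hint worth investigating rather than proof of a hung query, and it will not notice a session that is missing from a node entirely.

```shell
#!/bin/sh
# Hypothetical helper: read gpssh output lines such as "[node02] 9 con5782"
# on stdin and print each session whose count differs between nodes.
find_uneven_sessions() {
  awk '
    # Only consider lines whose third field looks like a session ID.
    $3 ~ /^con[0-9]+$/ {
      counts[$3] = counts[$3] " " $1 "=" $2      # per-node counts, in input order
      if (!($3 in min) || $2 + 0 < min[$3]) min[$3] = $2 + 0
      if (!($3 in max) || $2 + 0 > max[$3]) max[$3] = $2 + 0
    }
    END {
      # A session is reported when its lowest and highest counts differ.
      for (c in counts)
        if (min[c] != max[c]) print c ":" counts[c]
    }
  ' | sort
}
```

With the session above saved to a file, `find_uneven_sessions < gpssh_output.txt` would single out con5782, where node02 shows 9 processes and the other nodes show 8.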

GP 4.0.5.4 out

Greenplum HD Announced

Reading the news on the Greenplum HD announcement. I find it especially interesting because one of the main reasons I had an initial flurry of posts here and then trailed off was that I got heavily involved in our Hadoop installation and restructuring it. We’re currently using Cloudera’s Hadoop packages and the way they handle distribution of their software is about as good as you can get. I’m interested to see how Greenplum’s version of the software works. I’d heard talk of a couple of MapReduce implementations at startups that were seeing impressive performance improvements. In a large enterprise there is definitely a place for both Hadoop and an MPP database and the trick is getting them to share data easily, which is why I was very impressed to see the 4.1 version of Greenplum with the ability to read from HDFS.

The big question is how well Greenplum is going to be able to support the release going forward. Greenplum is based off an older version of Postgres and I get a monthly question from someone about some feature that is in a later version of Postgres that doesn’t seem to be in Greenplum. Is their Hadoop implementation going to get the same treatment? Will Greenplum be able to keep up with the frequent changes to the Hadoop codebase and keep their internal product up to date? Will it even really matter?

One extremely interesting thing we should see over the next year is a push on how to integrate EMC SAN architecture into both Greenplum and Hadoop. The old pre-EMC Greenplum sounded much like what I’ve heard from my Cloudera interactions, “Begrudgingly we see a use case for SAN storage, but might I suggest instead you cut off your left arm and beat yourself to death with it first.” I realize we’re working with really big data here so looking at SAN storage seems insane at first. Once you get into managing site-to-site interactions, non-interruptive backups and attempting to keep consistent IO throughput across not only dozens if not hundreds of servers but different generations of servers, you can see the play.

I’m taking a really long look at Flume right now, and that’s a key feature that the Cloudera implementation will have over what I’ve seen from Greenplum. The fault tolerant Name Node and Job Tracker look interesting but I don’t see these as very high risks in the current Hadoop system and as I understand they are already in the process of being addressed in core Hadoop. Performance promises are “meh”; I don’t take any bullet point that says X times speedup seriously. It could be true, but you really need a good whitepaper to back up a speed improvement boast.

So for me the jury is still out. It looks cool and I can’t wait to actually use it, but I said the same thing about Chorus a year ago and we still haven’t been approached with a production ready version of it.

GP 4.0.5.0 is out

plugin action

move data from db to db

We had two Greenplum instances running and we needed to copy one big table to the other. There’s a variety of ways to dump and import but we were moving a large amount of data, so the thought of dropping it to a local file was not a very good option.

One of the ways to move a table from one instance to the other is with COPY commands. If you are on the destination database you would use:

psql -h remotegreenplumtopulldatafrom -U me -c "COPY myschema.mytable to STDOUT" | psql -c "COPY myschema.mytable FROM STDIN"

We were going to move a lot of data though, so I came up with a quick script to do it a partition at a time:

#!/usr/bin/perl

################################

# database_dump

#

# Simple program to go out and copy dated partitions of

# a greenplum db to another db where the table exists

# it starts at start_time and goes incrementally backwards

# a day at a time until it gets to end_time

#

# 2010-03-16 SHK

################################

use strict;

use warnings;

use POSIX;

use Time::Local;

# Fields are S,M,H,D,M,Y - Note month is 0-11

my $start_time = timelocal(0,0,0,1,2,2011);

my $end_time = timelocal(0,0,0,1,2,2010);

my $cmd;

my $running_date = $start_time;

until ( $running_date < $end_time ) {

my $part_date = strftime("%Y%m%d",localtime($running_date));

$running_date -= 86400;

$cmd = qq~psql -h remotegreenplumtopullfrom -U skahler -c "COPY myschema.mytable_1_prt_$part_date to STDOUT" | psql -c "COPY myschema.mytable FROM STDIN"~;

print "$cmd\n";

system($cmd);

}

exit;

In our case the new db didn't have much action going on while I was moving the data in. I think if it was an active table that I was moving things into, I'd create a table on the destination, load the data into it and then exchange it as a partition into my target table.
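To make that staging idea concrete, here is a rough sketch of what one day's load might look like. It only prints the commands rather than running them, so they can be reviewed first. Several things here are assumptions and not something I actually ran: that myschema.mytable is range-partitioned by date with one partition per day, the staging table name `mytable_stage_YYYYMMDD`, and the function name `staged_load_commands`. It is also worth checking that the staging table ends up with the same columns and distribution as the target before exchanging.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the commands to load one day's
# partition through a staging table. Argument is a date like 2011-03-01.
# The body runs in a subshell so its variables do not leak to the caller.
staged_load_commands() (
  day="$1"
  part="$(printf '%s' "$day" | tr -d '-')"    # 2011-03-01 -> 20110301
  stage="myschema.mytable_stage_${part}"      # assumed staging table name
  cat <<EOF
psql -c "CREATE TABLE ${stage} (LIKE myschema.mytable)"
psql -h remotegreenplumtopullfrom -U me -c "COPY myschema.mytable_1_prt_${part} TO STDOUT" | psql -c "COPY ${stage} FROM STDIN"
psql -c "ALTER TABLE myschema.mytable EXCHANGE PARTITION FOR (DATE '${day}') WITH TABLE ${stage}"
psql -c "DROP TABLE ${stage}"
EOF
)
```

Running `staged_load_commands 2011-03-01` prints four commands. After the exchange the staging table holds whatever used to be in that partition, which is why the last step drops it; skip the drop if the old contents need a look first.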