One of the clusters we tried out used C2100s with LSI 9260-8i controllers. They ran fantastically out of the gate, but we started to run into some issues. I can attest that our rack of servers ran hot. During all-nighters the facility would get cold, and I’d stand behind that rack because the heat coming off over a dozen servers, each with a dozen disks spinning and processors running full bore, kept my fingers from cramping up. It wasn’t heat-sensor-warning hot, but it definitely put out some warmth. Later, when we started to have issues, we’d pull a controller out and find the heat sink sitting on top of the card, not attached in any way. I think the problem was that these controllers hold the heat sink on with plastic clips and springs. As our servers ran warm, though never to the sensor warning level, the clips would eventually melt and leave the heat sink just sitting on top of the controller.

Year: 2012

Benchmarks for R510 Greenplum Nodes

Here are gpcheckperf results from hammering against a couple of our R510s. Each server has twelve 3.5-inch 600GB 15k RPM 6Gb/s SAS disks split into four virtual disks. The first six disks form one group: 50GB is carved off for an OS partition and the rest goes to a data partition. The second set of six disks is set up the same way, with 50GB going to a swap partition and the rest going to another large data partition. The virtual disks use No Read Ahead, Force Write Back, and a Stripe Element Size of 128KB. The partitions are formatted with XFS, and the servers run RHEL 5.6.

[gpadmin@mdw ~]$ /usr/local/greenplum-db/bin/gpcheckperf -h sdw13 -h sdw15 -d /data/vol1 -d /data/vol2 -r dsN -D -v

====================
== RESULT
====================
disk write avg time (sec): 85.04
disk write tot bytes: 202537697280
disk write tot bandwidth (MB/s): 2275.84
disk write min bandwidth (MB/s): 1087.34 [sdw15]
disk write max bandwidth (MB/s): 1188.50 [sdw13]
-- per host bandwidth --
disk write bandwidth (MB/s): 1087.34 [sdw15]
disk write bandwidth (MB/s): 1188.50 [sdw13]

disk read avg time (sec): 64.67
disk read tot bytes: 202537697280
disk read tot bandwidth (MB/s): 2987.98
disk read min bandwidth (MB/s): 1461.30 [sdw15]
disk read max bandwidth (MB/s): 1526.68 [sdw13]
-- per host bandwidth --
disk read bandwidth (MB/s): 1461.30 [sdw15]
disk read bandwidth (MB/s): 1526.68 [sdw13]

stream tot bandwidth (MB/s): 8853.81
stream min bandwidth (MB/s): 4250.22 [sdw13]
stream max bandwidth (MB/s): 4603.59 [sdw15]
-- per host bandwidth --
stream bandwidth (MB/s): 4603.59 [sdw15]
stream bandwidth (MB/s): 4250.22 [sdw13]

Netperf bisection bandwidth test
sdw13 -> sdw15 = 1131.840000
sdw15 -> sdw13 = 1131.820000

Summary:
sum = 2263.66 MB/sec
min = 1131.82 MB/sec
max = 1131.84 MB/sec
avg = 1131.83 MB/sec
median = 1131.84 MB/sec
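In this run each "tot bandwidth" figure is the sum of the per-host figures (1087.34 + 1188.50 = 2275.84 MB/s for writes, 1461.30 + 1526.68 = 2987.98 MB/s for reads, 4603.59 + 4250.22 = 8853.81 MB/s for stream), so the totals describe aggregate throughput across both hosts, not a single node. Below is a small Python sketch, not part of gpcheckperf, that pulls the per-host lines out of a saved report and checks them against the reported totals. The regular expressions assume the line format shown above, and the function name `check_totals` is hypothetical.

```python
import re
from collections import defaultdict

# Per-host lines look like "disk write bandwidth (MB/s): 1087.34 [sdw15]".
# The min/max lines ("disk write min bandwidth ...") and the total line
# ("disk write tot bandwidth ...") do not match because of the extra word.
PER_HOST = re.compile(r"(disk write|disk read|stream) bandwidth \(MB/s\): ([\d.]+) \[(\S+)\]")
TOTAL = re.compile(r"(disk write|disk read|stream) tot bandwidth \(MB/s\): ([\d.]+)")

# Excerpt of the gpcheckperf output above (write, read and stream sections).
SAMPLE = """
disk write tot bandwidth (MB/s): 2275.84
disk write min bandwidth (MB/s): 1087.34 [sdw15]
disk write max bandwidth (MB/s): 1188.50 [sdw13]
-- per host bandwidth --
disk write bandwidth (MB/s): 1087.34 [sdw15]
disk write bandwidth (MB/s): 1188.50 [sdw13]
disk read tot bandwidth (MB/s): 2987.98
-- per host bandwidth --
disk read bandwidth (MB/s): 1461.30 [sdw15]
disk read bandwidth (MB/s): 1526.68 [sdw13]
stream tot bandwidth (MB/s): 8853.81
-- per host bandwidth --
stream bandwidth (MB/s): 4603.59 [sdw15]
stream bandwidth (MB/s): 4250.22 [sdw13]
"""


def check_totals(report: str, tol: float = 0.02):
    """Return {test: (reported_total, summed_per_host, matches)} for each test.

    tol absorbs rounding, since every printed value is rounded to 2 decimals.
    """
    per_host = defaultdict(float)
    for test, mbps, _host in PER_HOST.findall(report):
        per_host[test] += float(mbps)
    results = {}
    for test, total in TOTAL.findall(report):
        summed = round(per_host[test], 2)
        results[test] = (float(total), summed, abs(float(total) - summed) <= tol)
    return results


if __name__ == "__main__":
    for test, (total, summed, ok) in check_totals(SAMPLE).items():
        print(f"{test}: reported {total}, per-host sum {summed}, match={ok}")
```

Against the excerpt, all three tests match. Reading the report per host this way also makes it easier to spot a node whose figure sits well below its peers.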

Testing out UAC

Finding trouble spots

I’ve been fighting some database performance issues recently and started using the following query to find tables that are in use right now but show 0 rows in the catalog. These are likely unanalyzed tables being used in queries, which means the optimizer is planning against missing statistics. We have queries that scan the entire database for potentially unanalyzed tables, but it takes far fewer resources to look only at what’s currently in flight and address what we’re hammering on right now. The query joins a couple of catalog tables (pg_database and pg_stat_activity) that the visible columns don’t need, but I keep them there in case I need to start ungrouping things and pull in more specific data.

SELECT n.nspname AS "schema_name",
       c.relname AS "object_name",
       c.reltuples,
       c.relpages
FROM pg_locks l,
     pg_class c,
     pg_database d,
     pg_stat_activity s,
     pg_namespace n
WHERE l.relation = c.oid
  AND l.database = d.oid
  AND l.pid = s.procpid            -- procpid is the pre-9.2 name; newer PostgreSQL-based versions use pid
  AND c.relnamespace = n.oid
  AND c.relkind = 'r'              -- ordinary tables only
  AND n.nspname !~ '^pg_'::text    -- skip system schemas
  AND c.reltuples = 0              -- catalog says the table is empty
GROUP BY 1, 2, 3, 4
ORDER BY 1, 2;
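Once the query turns up a table, the usual fix is to ANALYZE it so the optimizer has real statistics. Here is a minimal Python sketch that turns the query’s rows into ANALYZE statements, assuming the (schema_name, object_name, reltuples, relpages) rows have already been fetched with whatever client you use. The helper names `quote_ident` and `analyze_statements` are hypothetical.

```python
def quote_ident(name: str) -> str:
    """Double-quote a catalog name, doubling any embedded double quotes.

    Always quoting is a simplification of PostgreSQL's quote_ident(), which
    only quotes when needed. It is safe here because pg_class and pg_namespace
    store the exact, case-preserved name.
    """
    return '"' + name.replace('"', '""') + '"'


def analyze_statements(rows):
    """Build one ANALYZE per distinct schema.table.

    rows: iterable of (schema_name, object_name, reltuples, relpages).
    Duplicates are skipped in case rows from several runs are combined.
    """
    seen = set()
    stmts = []
    for schema, table, _reltuples, _relpages in rows:
        if (schema, table) in seen:
            continue
        seen.add((schema, table))
        stmts.append(f"ANALYZE {quote_ident(schema)}.{quote_ident(table)};")
    return stmts


if __name__ == "__main__":
    # Hypothetical rows shaped like the query's output.
    sample = [("sales", "orders_stage", 0.0, 0), ("public", "Mixed Case", 0.0, 0)]
    for stmt in analyze_statements(sample):
        print(stmt)
```

Generating the statements rather than running them directly leaves room to eyeball the list before kicking off ANALYZE on a busy cluster.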

Data Science Summit 2012

I’m attending the 2012 Data Science Summit, and I’m happy to report it has been well worth my time. It isn’t a nuts-and-bolts conference about which technologies to use or how to use them, which processes to follow, or which machine learning algorithms to apply in a given situation. Instead, it offers presentations and panels on working with data that apply directly to much of the work I do.

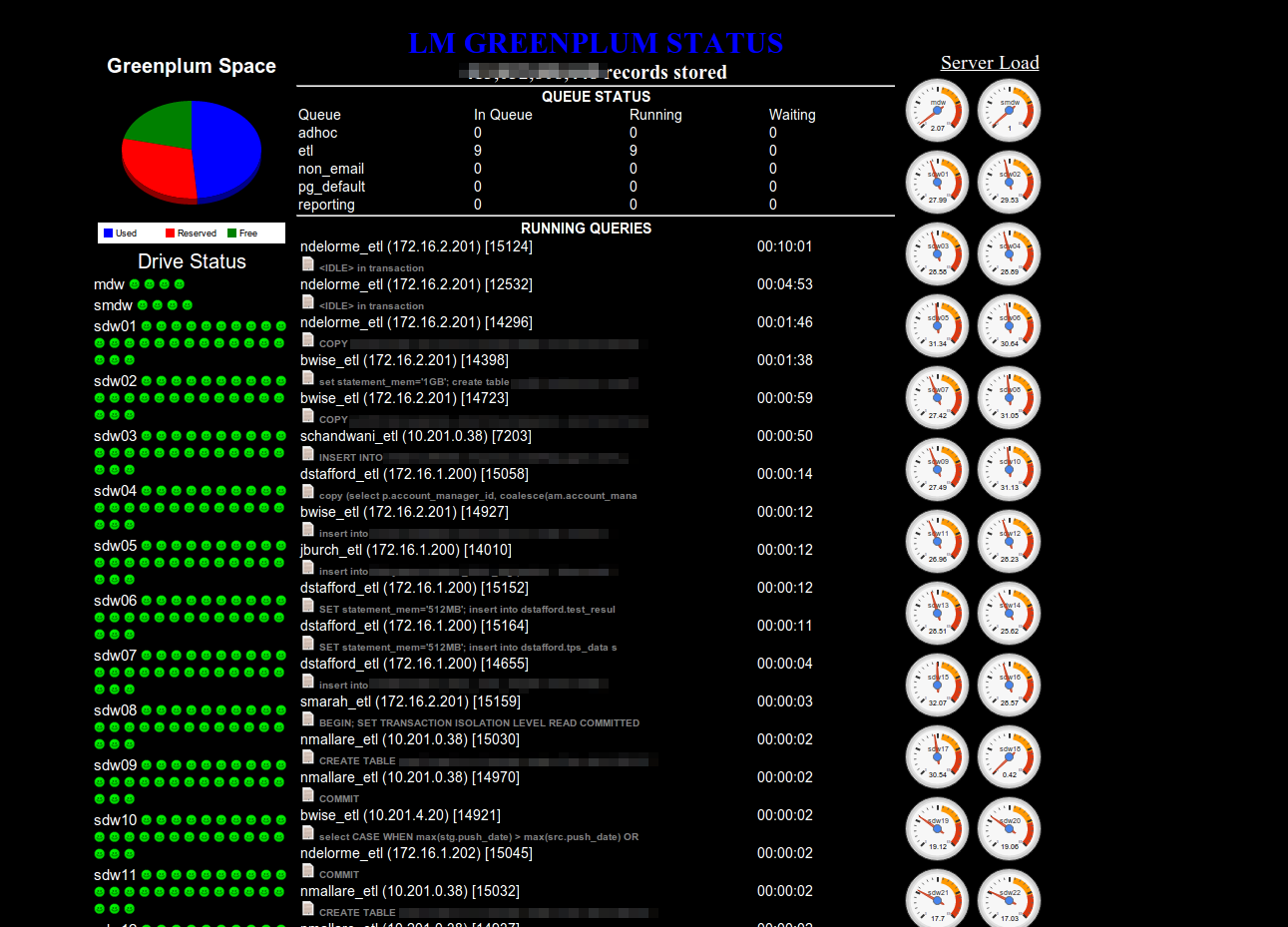

Dashboarding Greenplum

When Greenplum first landed in our shop, they wanted us to use gpperfmon. It quickly became obvious that it wasn’t stable at that time and that it created way too much overhead. So a couple of years ago I came up with my own dashboarding tools that rely on the database as little as possible and live outside of the cluster. My thinking was that if the cluster is down, it’s pretty hard to troubleshoot what’s wrong with it when the stats are kept in the cluster itself. The tool I came up with blends Greenplum query checks with sar data and uses MegaCLI to pull disk health. Here’s a quick glimpse so you can get an idea of what I’ve got going.
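The disk-health piece of a tool like this mostly comes down to parsing MegaCLI output. Here is a minimal Python sketch, not the tool described above, that assumes the text of a physical-drive listing (for example from `MegaCli64 -PDList -aALL`) has been captured to a string. It also assumes each drive block starts with an `Enclosure Device ID:` line and contains `Slot Number:`, `Media Error Count:`, `Predictive Failure Count:` and `Firmware state:` lines. Those field names come from typical MegaCLI output and may differ between versions. The function names are hypothetical.

```python
def parse_pdlist(text: str):
    """Split a MegaCLI physical-drive listing into one dict per drive.

    Assumes "Key: value" lines and that each drive block begins with an
    "Enclosure Device ID:" line (an assumption about the output layout).
    """
    drives, current = [], None
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank lines and headers such as "Adapter #0"
        key, value = key.strip(), value.strip()
        if key == "Enclosure Device ID":
            current = {}
            drives.append(current)
        if current is not None:
            current[key] = value
    return drives


def unhealthy(drives):
    """Return (slot, reason) pairs for drives that look like trouble."""
    problems = []
    for d in drives:
        slot = d.get("Slot Number", "?")
        state = d.get("Firmware state", "")
        if not state.startswith("Online"):
            problems.append((slot, f"state={state}"))
        for counter in ("Media Error Count", "Predictive Failure Count"):
            if int(d.get(counter, "0")) > 0:
                problems.append((slot, f"{counter}={d[counter]}"))
    return problems


# Hypothetical sample in the assumed layout: slot 1 has failed.
SAMPLE = """Adapter #0

Enclosure Device ID: 32
Slot Number: 0
Media Error Count: 0
Predictive Failure Count: 0
Firmware state: Online, Spun Up

Enclosure Device ID: 32
Slot Number: 1
Media Error Count: 3
Predictive Failure Count: 0
Firmware state: Failed
"""

if __name__ == "__main__":
    for slot, reason in unhealthy(parse_pdlist(SAMPLE)):
        print(f"slot {slot}: {reason}")
```

Only drives whose state starts with `Online` count as healthy here, so hot spares or unconfigured drives would also be flagged. A real check would probably allow those states for the hardware in question.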

Went to drop an update on the website and it is in all kinds of pain. Looks like there is some work to be done.

Falling behind

The gpadmin site has been sorely lacking updates recently. That’s not to say I don’t have things to post about; I just haven’t had the time to write reasonable posts about them. Look for some updates soon.